Transcribing Two Speakers with Amazon Transcribe via Speaker Identification with .NET

Want to learn more about AWS Lambda and .NET? Check out my A Cloud Guru course on ASP.NET Web API and Lambda.

Download full source code.

Last month I wrote a couple of posts about the Amazon Transcribe service. In the first post, I showed how to upload the audio, start the transcription job, poll for completion, and download the JSON results with .NET. The second showed how to use channel identification to identify speakers.

In this post, I will show how to use speaker identification for cases where the speakers are on the same channel.

What I want to end up with is something like this:

00:00:22: spk_0: blah, blah, blah.

00:00:24: spk_1: yada, yada, yada.

00:00:35: spk_0: blah, blah, blah, blah.

00:00:40: spk_1: yada, yada, yada, yada, yada.

The Audio File

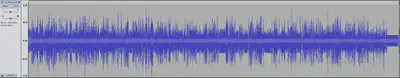

When I finish recording a podcast, I have two audio files: one with what I said, and one with what the guest said. I put these two files together in Audacity, where each file is on a different audio channel. When I finish editing the episode, I merge the two channels into a single mono file. It would not be a pleasant listening experience if you heard one voice in your right ear and the other in your left.

This is what the audio looks like in Audacity. Everything is on the same channel.

Then I export the audio to an MP3 file.

This is the file I upload to S3 and run the Transcribe job on.

I’m not going to show that code again; you can see it in the earlier post.

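But I make one change to the StartTranscriptionJobRequest. I add a Settings object and turn on speaker identification by setting its ShowSpeakerLabels property to true. Transcribe also requires MaxSpeakerLabels whenever ShowSpeakerLabels is on, so I set it to 2: one for me and one for my guest.

```csharp
var startTranscriptionJobRequest = new StartTranscriptionJobRequest()
{
    TranscriptionJobName = transcriptionJobName,
    LanguageCode = LanguageCode.EnUS,
    Settings = new Settings()
    {
        // turn on speaker identification for a single-channel recording
        ShowSpeakerLabels = true,
        // required when ShowSpeakerLabels is true; my podcasts have two speakers
        MaxSpeakerLabels = 2
    },
    Media = new Media()
    {
        MediaFileUri = s3Uri
    },
    OutputBucketName = bucketName
};
```

The earlier post also showed how to download the JSON results, so do the same here. Now you have the JSON and want a transcript with the speakers identified.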

Processing the JSON

The code here is designed to be easy to understand, not to be efficient. If you want to use it in production, I suggest you make it more efficient.

As mentioned above, this code assumes three things: you have downloaded the JSON results from S3, the audio has a single channel, and you used speaker identification.
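You might want the program to stop early when those assumptions don't hold. One option is to peek at the keys under results before deserializing.

This is a minimal sketch, not part of the downloadable code. It assumes, based on the Transcribe output I've seen, that:

- speaker identification adds a speaker_labels object;
- channel identification adds a channel_labels object.

The CheckAssumptions helper and the trimmed-down sample JSON are hypothetical.

```csharp
using System;
using System.Text.Json;

// Hypothetical, trimmed-down sample of speaker identification output
string json = "{\"results\":{\"speaker_labels\":{\"speakers\":2},\"items\":[]}}";

Console.WriteLine(CheckAssumptions(json));

// Hypothetical helper: true when the JSON looks like single-channel,
// speaker-labeled output
bool CheckAssumptions(string transcriptJson)
{
    using JsonDocument document = JsonDocument.Parse(transcriptJson);
    if (!document.RootElement.TryGetProperty("results", out JsonElement results))
    {
        return false;
    }

    // assumed key names: "speaker_labels" for speaker identification,
    // "channel_labels" for channel identification
    bool hasSpeakerLabels = results.TryGetProperty("speaker_labels", out _);
    bool hasChannelLabels = results.TryGetProperty("channel_labels", out _);
    return hasSpeakerLabels && !hasChannelLabels;
}
```

Checking the raw JsonDocument avoids depending on the model classes, so the check works even if the models ignore those keys.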

The program uses a Dictionary to store the lines of dialogue. The key is the start time of each line, and the value is a Line object. Among other things, each Line holds what was said and the speaker’s label.

The loop iterates through the items, which are the words and punctuation marks spoken by each speaker. It keeps track of the current speaker and the time that speaker started talking. When the speaker changes, or the loop reaches the last item, it adds the line to the dictionary. It also does a little tidying, such as removing the space before punctuation.

There are probably some edge cases where this code might not be quite right. Again, this is a demo, not production-ready code.
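Stripped of the JSON models, the grouping described above can be sketched on its own. This is a simplified illustration, not the post's code:

- The items are hypothetical tuples of speaker label, type, content, and start time, rather than the deserialized Item objects.
- Each line is stored as a formatted string rather than a Line.
- Punctuation gets a start time of 0 here because, in the output I've seen, punctuation items carry no start time.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Hypothetical sample items: (speaker label, type, content, start time in seconds)
var items = new (string Speaker, string Type, string Content, double Start)[]
{
    ("spk_0", "pronunciation", "Hello", 22.0),
    ("spk_0", "punctuation", ",", 0),
    ("spk_0", "pronunciation", "there", 22.4),
    ("spk_0", "punctuation", ".", 0),
    ("spk_1", "pronunciation", "Hi", 24.1),
    ("spk_1", "punctuation", ".", 0),
};

var lines = new List<string>();
var sb = new StringBuilder();
string currentSpeaker = null;
double lineStart = 0;

foreach (var item in items)
{
    // speaker changed: save the finished line before starting a new one
    if (currentSpeaker != null && item.Speaker != currentSpeaker)
    {
        SaveLine();
        sb.Clear();
        currentSpeaker = null;
    }

    // first item of a line: remember who is speaking and when they started
    if (currentSpeaker == null)
    {
        currentSpeaker = item.Speaker;
        lineStart = item.Start;
    }

    // punctuation attaches to the previous word, so drop the trailing space first
    if (item.Type == "punctuation" && sb.Length > 0)
    {
        sb.Length--;
    }
    sb.Append(item.Content).Append(' ');
}

// the final line never sees a speaker change, so save it explicitly
if (currentSpeaker != null)
{
    SaveLine();
}

lines.ForEach(Console.WriteLine);

void SaveLine()
{
    string time = TimeSpan.FromSeconds(lineStart).ToString(@"hh\:mm\:ss");
    lines.Add($"{time}: {currentSpeaker}: {sb.ToString().TrimEnd()}");
}
```

This sketch flushes the final line after the loop instead of checking for the last item inside it. Both approaches save every line.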

```csharp
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Text;
using System.Text.Json;
using System.Text.Json.Serialization;

var serializationOptions = new JsonSerializerOptions()
{
    Converters =
    {
        new JsonStringEnumConverter()
    },
    // Transcribe writes numbers such as start_time as JSON strings
    NumberHandling = JsonNumberHandling.AllowReadingFromString,
};
string jsonString = File.ReadAllText("monotranscription.json");

TranscriptionJob transcriptionJob = JsonSerializer.Deserialize<TranscriptionJob>(jsonString, serializationOptions);

// key: start time of the line in seconds; value: the line of dialogue
Dictionary<double, Line> dialogue = new Dictionary<double, Line>();

StringBuilder sb = new StringBuilder();
double speakerStartTime = 0;
string currentSpeaker = string.Empty;
var lastItem = transcriptionJob.Results.Items.LastOrDefault();

foreach (var item in transcriptionJob.Results.Items)
{
    // change of speaker: save the finished line and start a new one
    if (currentSpeaker != string.Empty && currentSpeaker != item.SpeakerLabel)
    {
        AddLineToDialogue();
        sb.Clear();
        currentSpeaker = string.Empty;
    }

    // first item of a line: remember who is speaking and when they started
    if (currentSpeaker == string.Empty)
    {
        speakerStartTime = item.StartTime;
        currentSpeaker = item.SpeakerLabel;
    }

    AddItemToStringBuilder(sb, item);

    // last item in the list: save the final line
    if (item == lastItem)
    {
        AddLineToDialogue();
    }
}

void AddLineToDialogue()
{
    var line = new Line
    {
        Content = sb.ToString().TrimEnd(),
        StartTime = speakerStartTime,
        SpeakerLabel = currentSpeaker
    };

    double key = speakerStartTime;
    // two lines can share a start time; nudge the key so neither line is lost
    while (!dialogue.TryAdd(key, line))
    {
        Console.WriteLine($"Two lines with the same start time: {key}");
        key += 0.001;
    }
}

void AddItemToStringBuilder(StringBuilder builder, Item item)
{
    // punctuation attaches to the previous word, so remove the space after that word
    if (item.AudioType == AudioType.Punctuation && builder.Length > 0)
    {
        builder.Remove(builder.Length - 1, 1);
    }
    builder.Append($"{item.Alternatives[0].Content} ");
}

foreach (var keyValuePair in dialogue.OrderBy(x => x.Key))
{
    var timeSpan = TimeSpan.FromSeconds(keyValuePair.Key);
    Console.WriteLine($"{timeSpan.ToString(@"hh\:mm\:ss")}: {keyValuePair.Value.SpeakerLabel}: {keyValuePair.Value.Content}");
}
```

The code above also uses a few more classes: the models for the JSON and the Line class. You can see the complete code in the attached zip.
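The program sorts the dictionary with OrderBy just before printing. An alternative is a SortedDictionary<double, string>, which keeps its keys in ascending order as lines are added, so no separate sort is needed.

This is a sketch with made-up sample data, not what the downloadable code does. Like the main program, it nudges a duplicate start time forward by a millisecond rather than dropping the line.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical sample lines: (start time in seconds, speaker label, text)
var sampleLines = new (double Start, string Speaker, string Text)[]
{
    (35.0, "spk_0", "blah, blah, blah, blah."),
    (22.0, "spk_0", "blah, blah, blah."),
    (24.0, "spk_1", "yada, yada, yada."),
    (24.0, "spk_0", "blah."), // same start time as the previous entry
};

// SortedDictionary keeps its keys ordered, so no OrderBy is needed when printing
var dialogue = new SortedDictionary<double, string>();

foreach (var (start, speaker, text) in sampleLines)
{
    double key = start;
    // nudge duplicate start times forward so no line is lost
    while (dialogue.ContainsKey(key))
    {
        key += 0.001;
    }
    dialogue.Add(key, $"{speaker}: {text}");
}

foreach (var entry in dialogue)
{
    var timeSpan = TimeSpan.FromSeconds(entry.Key);
    Console.WriteLine($"{timeSpan.ToString(@"hh\:mm\:ss")}: {entry.Value}");
}
```

The trade-off is cost per insert. Each insert into a SortedDictionary is O(log n), compared with O(1) on average for a Dictionary, which is unlikely to matter for a podcast-length transcript.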

Download full source code.